Hello, I'm Ayush! I am a researcher passionate about equipping embodied agents with human-like learning capabilities. I am currently a first-year CS PhD student at Northeastern University, advised by Prof. Lawson Wong. Before this, I worked with Prof. David Hsu at NUS on leveraging language-based abstractions for open-world navigation.

Education

PhD in Computer Science, 2025 - Present

Northeastern University, Boston, MA, USA

B.E. in Electronics & Instrumentation, 2022

BITS Pilani, Pilani

News

Dec 2025 SignLoc got accepted to RA-L!

Sep 2025 Joined as a CS PhD student at Northeastern University.

Nov 2024 Began as an RA at NUS with Prof. David Hsu

Aug 2024 Got selected for the Fatima Fellowship (declined)

Apr 2024 CommonSense Object Affordance got accepted to TMLR!

Nov 2023 My project proposal got selected for the OpenAI Researcher Access Program

May 2023 Presented CLIPGraphs at the PT4R Workshop at ICRA 2023

May 2023 CLIPGraphs got accepted to RO-MAN 2023

Jan 2023 Presented Sequence Agnostic MultiON at the RnD Showcase at IIIT-H

Jan 2023 Sequence Agnostic MultiON got accepted to ICRA 2023

May 2022 Graduated and began as an RA at IIIT-H

Jan 2022 My project proposal got selected for the BITS-AUGSD Undergraduate Project Funding.

Publications

Sign Language: Towards Sign Understanding for Robot Autonomy

Under Review | paper | code | dataset

We introduce navigational sign understanding: parsing locations and directions from signs to aid robot localization, navigation, and scene understanding. We provide a new dataset, benchmark several baselines, add active sign localization, and demonstrate the promise of VLMs for real-world use with a demo on a Spot robot.

SignLoc: Robust Localization using Navigation Signs and Public Maps

RA-L 2025, ICRA 2026 | paper | code | video

We present SignLoc, a global localization method that uses navigation signs to localize robots directly on floor plans and OSM maps without prior mapping. Using a probabilistic Monte Carlo framework, SignLoc achieves robust localization after just one or two sign observations across campus, mall, and hospital environments.

Physical Reasoning and Object Planning for Household Embodied Agents

TMLR 2024 | OpenReview | code | dataset

To demystify the decision-making process behind choosing an object for task completion, we develop a three-step architecture and curate datasets to support future research in this domain. We also evaluate various LLM baselines and report our findings.

Sequence Agnostic MultiON

ICRA 2023 | arxiv | video | blog

You are already in a kitchen and are tasked with finding a fridge. Would you search for it in the current area or elsewhere in the house? We train an RL policy on the semantic relationships between static objects to generate efficient long-term goals, enabling quick retrieval of a list of objects.

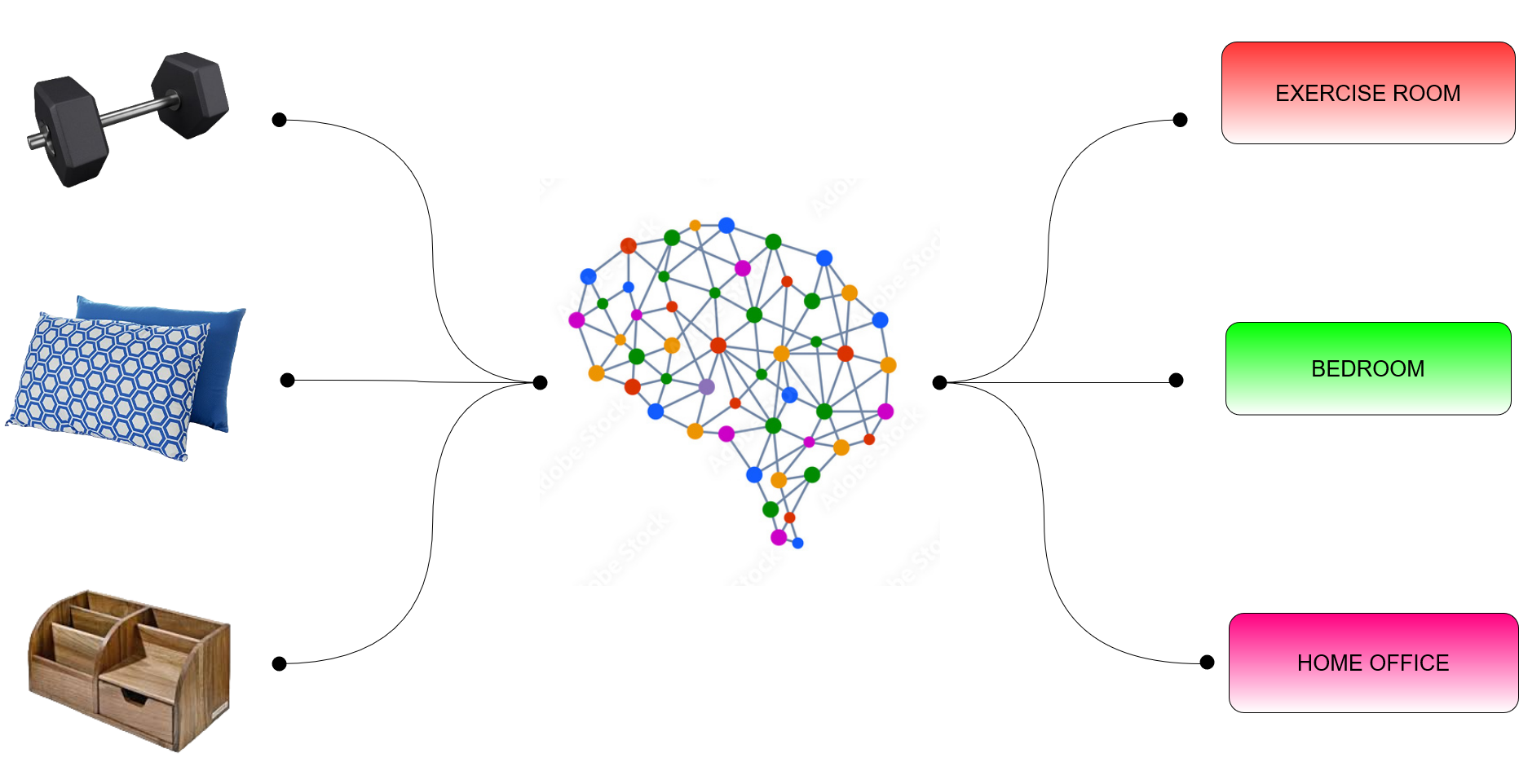

CLIPGraphs: Multimodal Graph Networks to Infer Object-Room Affinities

RO-MAN 2023 | arxiv | code | page

Leveraging the fact that humans have highly developed Object-Utility and Room-Utility relationships, we generate latent embeddings aligned with human commonsense that are useful for various Embodied AI tasks. To do so, we develop a Graph Neural Network that processes human preference datasets and foundation model features.